This is a short blog series on using Power BI tools to support testing ETL processes. I have presented on this subject at a few SQL Saturdays over the past few years and am finally succumbing to multiple requests to turn it into a blog post. Realizing the amount of content is more than I typically would put into a single post, I will be putting together this short series to cover the material. This first post will walk through the entire process at a high level. I will follow this post with a deeper look at Power Query’s role in the process. The third post will cover Power Pivot and building out test cases. Finally, we will wrap the series up with some visualization ideas for Excel and Power View. You can find all the posts as they come online here. Let’s get started.

The Problem Area

Why use Power BI to test ETL? While working as the architect on an ETL project moving data from a third-party web service to an on-premises financial solution, we needed to put together a testing strategy that could be implemented by non-developers on the project. Our project was “too small” to engage our QA team, but the requirement for reusable testing still needed to be fulfilled. Our project team consisted of a BI architect (that would be me), an ETL developer, and a business analyst (Chuck Whittemore).

NOTE: We are testing the data transformations and data load. This is not intended for auditing or performance. There are other tools for reviewing those, including the built-in reporting in SSIS and Pragmatic Works’ BI xPress tool. If you are tracking whether a package fails or succeeds, you should use either of these options, not this process.

The Big Idea

The BA and I were discussing options for testing and we theorized that we could use a new add-in for Excel (Power Query, still in preview at the time) with Power Pivot to build out tests. The key to success on this project was that we needed to be able to test with non-developer tools: no SQL Server Management Studio or SSIS could be involved in the testing. The primary reason for this is that the BA would be doing the testing. We also did not want to recreate every step in the ETL process the same way. So, time to put theory into practice. We determined that we would create test cases in Visual Studio, then build out tests to match those cases in Excel using the Power BI add-ins. He would do the work in Excel and we, the developer and I, would provide technical support as needed.

The Recommended Tools

Before we dig into the process, I want to lay out the tools used for development and for testing. While this solution can use other tools, it is important to know what we used in practice to create our solution.

ETL Development Tools

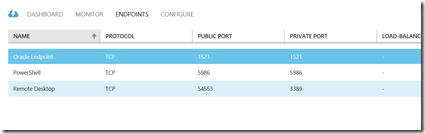

The ETL development was done using SQL Server Integration Services (SSIS). At the time, we needed to use Script tasks to consume the web service content. The financial system used a custom load process: we dumped formatted data into a file for the system to pick up and load.

In the examples I use in the presentations and will lay out here, I will be using a text-file-to-SQL-Server implementation. While complex ETL problems are common and hard to test, this simplified version is easier to follow in examples. You should be able to apply the principles used here to test any solution.

Testing Tools

The testing development for the referenced project consisted of Excel with Power Query and Power Pivot. Power Query was in preview at the time, so we had some performance issues and early bugs to work through. None of these issues prevented us from completing the project.

The presentation solution relies on the latest version of Power Query (which changes every month) and Power Pivot in Excel 2013. The examples are kept simple so they are easy to follow, but you should be able to solve most transformation tests with the combination of Power Query and Power Pivot. Definitely do not discount the capabilities of Power Query and the fact that new functionality is being added each month.

Team Foundation Server/Visual Studio Online

Both projects use the online version of TFS. If you are currently not using a source control and work tracking solution, I highly recommend you look at the online version of TFS. It allows up to 5 users for free and gives you the ability to use source control, create test plans, create test cases, log bugs and track changes. These are key features necessary to complete a good solution that can be managed and tracked.

The Process

I am going to walk through my demo to build out the process steps. This will allow you to see examples. I will call out anything of relevance related to the project here as well.

1. Business Rules

The first part of any project, especially in ETL, is to understand the business rules. If you are working with a data warehouse project, this may be fairly well documented in a dimensional model. In both of our cases here, we are moving data from one system to another. The transformations and business rules are primarily driven by the target system. Here are some examples of business rules in the media library sample project.

- Author names are stored in separate columns – FirstName and LastName

- If an author’s name includes a middle name or initial or some variation, this combination should be stored in the first name column. For example, J.R.R. Tolkien would be stored as follows:

— FirstName: J.R.R.

— LastName: Tolkien

- Copyright year should be stored as a four-digit value

- Page numbers should not exceed 1000

Every project has some type of business rules. It is hard to build out transformations and create test cases without these rules.
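To make the sample rules above concrete, here is a minimal sketch of how they could be written down as checks. This is Python for illustration only, not the Power Query logic used in the project, and the function names are hypothetical. It assumes the last whitespace-separated token of a name is the last name, which will not hold for every author.

```python
from typing import Tuple


def split_author_name(full_name: str) -> Tuple[str, str]:
    """Split a display name into (FirstName, LastName).

    Assumption: the last token is the last name; middle names and
    initials stay with the first name, as the business rule requires.
    """
    parts = full_name.split()
    if len(parts) < 2:
        # Hypothetical choice: a single-token name is stored as LastName.
        return ("", parts[0] if parts else "")
    return (" ".join(parts[:-1]), parts[-1])


def is_valid_copyright_year(value: str) -> bool:
    """Rule: copyright year is stored as a four-digit value."""
    return len(value) == 4 and value.isascii() and value.isdigit()


def is_valid_page_count(pages: int) -> bool:
    """Rule: page numbers should not exceed 1000."""
    return pages <= 1000


first_name, last_name = split_author_name("J.R.R. Tolkien")
print(first_name, "|", last_name)
```

Writing each rule as a small, separate check maps naturally onto one test case per rule.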

2. Source to Target Map

This is the single most important document for the tester. It tells the tester how the developer is getting from source to destination and what type of data massaging needs to be handled. Typically, people use some variation of the example created by the Kimball Group over the years.

3. Developing SSIS

The developer begins the process of creating the SSIS package. He will be using the Source to Target Map as his guide and will update that document to handle special cases in the data as needed. Ideally he is working in a development environment that will allow for test build outs as well.

4. Creating Test Plans and Test Cases

The tester creates test plans and test cases in TFS. These tests are based on business rules and the source to target map. Depending on both the complexity of the solution and the time to develop, some test cases could be as simple as “did the table move the correct data, field for field, and does the row count match?” This method can be particularly useful when working with large tables or simple data flows. However, you should have a test case for every transformation that massages the data. This will ensure that the data is being transformed as expected.

Keep in mind, this solution will support test cases for each field in a data load if required. The tester and architect should evaluate what is the appropriate amount of coverage to guarantee the highest level of quality in the data transform. As always, there is a diminishing rate of return if you “test everything” at the lowest level. It will be expensive in terms of cost of development when the chance for error is minimal. It will also take substantially longer to test everything. You need to understand and be able to articulate how the testing was accomplished and your level of confidence in the results.

5. Building the Tests

This is the most extensive part of the process besides the SSIS development. I will not go into all the details here, but will walk through the overall process and principles. I will provide detailed examples in the follow-up posts as noted above.

Let’s start with the end result. Chuck and I were able to determine that we could use DAX to create comparative formulas on data that could be brought into Power Pivot from both the source and the destination. Essentially, we wanted to use math to determine the results of the tests. So in our example, we use a formula like “if Source.CopyrightYear = Destination.CopyrightYear, then it passes, else it fails.” Depending on how you want to measure, pass could be 0 or 1. Then we add the values up to determine if data passed or failed the test. We can even tell you failure rates.
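The “use math to determine the results” idea can be sketched outside of DAX as well. The following Python snippet is only an illustration of the arithmetic, not the formula we used in Power Pivot. The sample titles and years are made up, and here a failure is scored as 1.

```python
# Hypothetical sample data keyed by a natural key (a book title here).
source = {"The Hobbit": 1937, "Dune": 1965, "Emma": 1815}
destination = {"The Hobbit": 1937, "Dune": 1956, "Emma": 1815}

# Score each destination row: 0 if CopyrightYear matches the source, else 1.
failures = {
    key: 0 if source.get(key) == year else 1
    for key, year in destination.items()
}

# Adding the scores up gives the number of failed rows and a failure rate.
failed_rows = sum(failures.values())
failure_rate = failed_rows / len(failures)

print(f"{failed_rows} of {len(failures)} rows failed ({failure_rate:.0%})")
```

Because every row is scored with a number, the same sum answers both “did the test pass?” and “how badly did it fail?”.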

In order to get the data in a comparative state, we needed each table in the destination paired with a matching table from the source. However, it is very common that sources and destinations are not one-for-one table matches. This is where Power Query comes in. Using Power Query in our example set, we bring in the text file and massage or shape the data to look like the destination. Most importantly, we need to apply all business rules and transformations to the source. Once this is done, we do no massaging on the destination data. This allows us to compare what the ETL process did with what our tests say it should have done.

A key part of being able to compare is the ability to relate the two tables in Power Pivot. You need to be able to match natural keys or derived keys between the two sources. The relationship should be from the destination table to the source table. Without this relationship, you will not be able to build the calculations for the tests. Keep in mind the goal is to get our source to look like the expected results. Any data in the destination should match the source in our scenario.

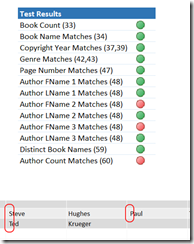

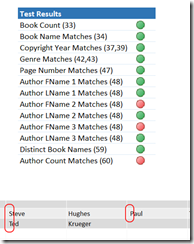
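The relationship from destination to source only works if the keys line up. As a rough sketch of that idea (Python sets standing in for the two key columns, with made-up key values), you can check which keys fail to match in either direction before building any field comparisons:

```python
# Hypothetical natural keys from the shaped source and from the destination.
source_keys = {"BOOK-001", "BOOK-002", "BOOK-003"}
destination_keys = {"BOOK-001", "BOOK-002", "BOOK-004"}

# Destination rows with no source match cannot be compared field by field.
unmatched_in_destination = sorted(destination_keys - source_keys)

# Source rows that never arrived in the destination suggest dropped data.
missing_from_destination = sorted(source_keys - destination_keys)

print("No source match:", unmatched_in_destination)
print("Not loaded:", missing_from_destination)
```

Either list being non-empty is worth investigating before trusting the field-level results.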

Once both tables are created and loaded into Power Pivot, we can complete the tests using DAX. In some cases, we create calculations on both tables to be compared. A classic example is row count. We count the number of rows in the source table and the destination table. Then we create a calculation on the destination to compare values. This meets the requirements of a row count test case (e.g. all data was successfully imported).

Another example of a test is to compare the content in a field from source to destination. This is where we use a lot of conditional logic to verify the contents of a field in a row are the same in both tables. Calculated columns (not measures) are used to create the comparison results. The conditional statement should result in a number. This is important in order to create a measure that sums up the results to determine if errors exist or not. If you choose success to be 1, then you will check your results against the row count to determine if there are errors. If you choose failure to be 1, then a nonzero count means you have errors. There is no right or wrong way to handle this; you would choose based on visualization techniques. Most of the time, using 1 for failures is fine. However, if you want to create KPIs, you will likely need success to be 1 so you have a good target to work with.
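The two scoring conventions can be compared side by side. This is a small Python sketch of the arithmetic only, with made-up comparison outcomes; in the workbook the flags would be a calculated column and the sums would be measures.

```python
# Hypothetical per-row outcomes: True means source and destination matched.
matches = [True, True, False, True]
row_count = len(matches)

# Convention A: success = 1. Errors exist if the sum falls short of the row count.
success_flags = [1 if matched else 0 for matched in matches]
has_errors_a = sum(success_flags) != row_count
pass_rate = sum(success_flags) / row_count  # a KPI-style value with a target of 1.0

# Convention B: failure = 1. Errors exist if the sum is nonzero.
failure_flags = [0 if matched else 1 for matched in matches]
has_errors_b = sum(failure_flags) != 0

print(has_errors_a, has_errors_b, pass_rate)
```

Both conventions reach the same verdict. The success-based one simply gives you a ratio with a natural target, which is why it suits KPIs.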

6. Testing the Initial Load

Once you have created the tests, you are ready to test the initial load. You will connect to both sources. Ideally, your source will not change so you can redo the test multiple times, but this will work regardless. Refresh the data, which may require rerunning the Power Query query. Once you have refreshed the data, you should be able to check the calculations in a simple pivot table to determine what tests have succeeded or failed. This is the beauty of this solution. After each subsequent execution of SSIS, you will be able to refresh your data and review your results to determine how successful the ETL is.

A side effect of this work is that the developer can review the test results in Excel and Power Pivot with you to more easily find the discrepancy in the data transform. In some cases, the tests themselves are in error. It is important that the developer and tester work together to determine the cause. A good team will be able to work through issues rather quickly.

7. Recording Bugs and Issues

You will need to go back to Visual Studio to change the pass/fail for each test. If a test fails, you can log a bug for the developer and use that information to determine if it was fixed prior to a subsequent run. It is likely that multiple sprints will be required to complete the work, so you can work with your team to determine the best ways to communicate what is ready. If you track the work in TFS, you will find queries are available to help you see what work has been completed.

You can determine if the fix worked and then set the test results accordingly. This will help show progress on the project as well.

8. Visualizing the Results

You can visualize your results using KPIs, conditional formatting and even Power View. If you have a project that needs to be easily evaluated, you can publish your results to SharePoint and use charts and graphs to show how accurate the process is so far.

We will dig into visualization options more in a following blog post.

Tracking Test History

No solution is perfect and that is true here as well. One of the most common questions is how to see the historical results. This solution does not easily provide for that. I am looking at options, but for the moment the idea is that the history will be tracked through TFS. However, you could save the workbook after each iteration. This will give you some history, but you would want to make sure that you don’t refresh data on a historical workbook or the results would be overwritten.

Some Final Thoughts

Power Query is not an ETL tool. Its target destination is always the same – Power Pivot. While its ease of use makes it appear to be a tool to be used for ETL, it is not there yet. However, that same ease of use is why it has a place in the testing process described here.

My plan is to have some deeper technical dives into parts of the solution in the future.