One of the tasks, we often do with migration projects is move large volumes of data. Depending on how you are configured, you may need to do the migration project in a development or UAT environment as opposed to a production environment. This is particularly true if you have policies in place on your production subscription that don’t allow the individuals doing the migration and validation tasks to work in that subscription.

Just Copy It… Nope

So you can copy Azure SQL Database using the Azure Portal, PowerShell, Azure CLI, and T-SQL. However, this functionality is limited to Azure SQL Database and does not work for Azure Synapse databases (a.k.a. SQL Pools). Early in 2021, the ability to use the copy functionality to copy databases between subscriptions is also supported but requires security work to make sure the permissions in the database servers and networking allow that to happen.

You can find out more about copying Azure SQL Database in this Microsoft Doc.

Just Restore It… Nope

You can restore to the current server or another server on the same subscription. However, you are unable to restore across subscription boundaries at this time. If you need to move to another server in the current subscription, the process is straightforward, you can use the restore process in Synapse to restore to the current server using a different name. You can also restore to a different server in either the current or different resource group in the same subscription. The restore technique is used in our move process, so details on how to restore a Synapse database will be in the next section.

Let’s Move a Synapse Database

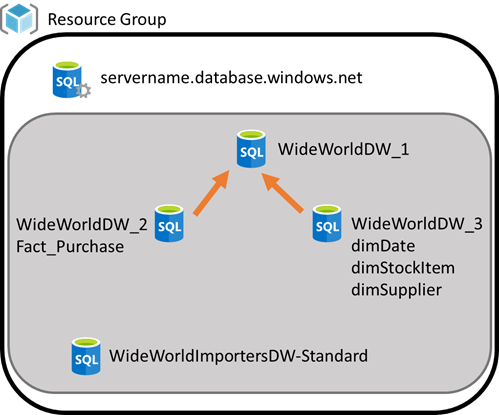

The process to move a Synapse database to another subscription requires some planning and pre-work. The first thing you need to do is create a new SQL Server in the same subscription you have your current Synapse environment. Because you can’t simply create servers, I would recommend that you add an Azure SQL Database to the server as a placeholder. An S0 should be sufficient to keep this server in place for what we are doing. DO NOT ADD anything to this server that will not be migrated. This is a temporary holding place for migrating databases. (This also works for other SQL Databases, but other options may work as well but are not the focus of this post.)

Now that you have the migration server created, the next step is to create a restore point. While this is not required because you can use the automatic restore points, creating a user-defined restore point is recommended. A user-defined restore point, allows you to choose the status of the database you want to migrate, rather than relying on the automatic points and trying to make sure you pick the right time (in UTC of course).

Once you have set the restore point, in the database you want to migrate, select Restore to open the panel to restore your database.

On the restore page, you have a number of options to complete.

- Restore point type: Choose User-defined restore points

- SQL pool name: This is not a big deal. The name is the database name used during the migration process and is not the final name used in the target server. Make sure it is something you know.

- Restore point: Select the restore point you created for this purpose.

- Server: Choose the migration server you created as the target.

- Performance level: This one is more interesting. I typically choose a smaller performance level for this restore. Keep in mind that Azure needs to allocate resources to support the restore. Because this is not a final deployment, smaller may go faster. However, NO SLAs exist for this process. That means your mileage will vary. We have seen restores happen in 30 minutes one day and over 5 hours the next. It will be very dependent on the data center and how busy it is. This time variation must be accounted for in your planning.

The next step is to move the server using the Move operation on the server page. You have the option to move to another resource group or another subscription. In our case, we will choose another subscription. IMPORTANT: You will need Contributor permissions in the target subscription in order to move the server to that subscription.

After you have moved the server to the target subscription, you need to set a restore point for that database on the migration server. Then you can restore that database to the target server. It is very important that you use the naming convention and performance levels that you need for this restore as it is the final step in the process. Once again the restore process has no Microsoft SLA and as a result may take longer than planned. You need to have contingencies in place if you are working in a deployment window or have time restrictions.

Finally, you need to clean up the migration server. I would recommend either scaling down or pausing the Synapse database to give you a backup for a while if needed. Once the database is validated on the target server, you can remove the Synapse database (removes storage costs). I would recommend keeping this server as your migration server to use in the future. You can use this process to create copies of databases for development and UAT or similar needs from production instances.

Other Thoughts and Considerations

Here are my final thoughts on this process. First, the fact that no SLA on the restore process is provided by Microsoft has created issues for us in some cases. We have had to extend deployment windows during production deployments on occasion. My recommendation is to plan for the worst case and finish early if all comes together on time.

This process works! You can use it with other SQL assets and you can use it in multiple directions. Keep the migration server around so you can support other processes. If you clean most of it out, the cost of maintaining it is the S0 SQL Database.

One final thought, this is Azure. Thus, this guidance could change tomorrow. We have been using this for about 12 months when this was written. I hope this helps some of you move these databases to support your business and development needs.