This post is a continuation of my adventure into the Tabular Object Model and how we can use it to make Power BI scalable and incorporate it into existing .NET applications. Quick refresher, the Tabular Object Model can be accessed through the XMLA endpoint in Power BI Premium workspaces. My previous posts have covered code around adding, deleting, and adjusting columns and measures, but this one will address how to manipulate hierarchies.

Power BI hierarchies are a powerful and easy way to enable end users to dig deeper into their visuals and data. While hierarchies can be a useful resource for self-serve analytics, maintaining hierarchies can be a pain as new levels get added or removed. Thankfully, if you have Power BI premium you can use the XMLA endpoint to add code into existing .NET applications to dynamically add or remove levels from hierarchies as they are created/removed in your application.

Unfortunately, while we can manipulate, add, and delete hierarchies and their levels, visuals already containing the hierarchy will not be automatically adjusted with any new levels/ordinals.

If you are new to using C# and the Tabular Object Model (TOM), please check out the previous blog post (https://dataonwheels.wordpress.com/2021/10/15/power-bi-meets-programmability-tom-xmla-and-c/) for both an introduction to the topic and detailed instructions on getting the C# portion of this demo stood up. Please reference the DataOnWheels GitHub page for sample PBIX files and C# packages, but note you will need a Power BI Premium workspace with XMLA endpoint write-back enabled in order to run this entire demo.

Power BI Hierarchies

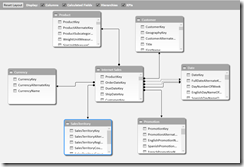

To start out, let’s make sure we understand the components of a hierarchy that we will need to replicate using our TOM script. In the Power BI Desktop app, creating a hierarchy is fairly simple. For example, let’s say I want to have end users drill down from category to subcategory. To do this, I would hover over the category column then click on the three dots next to the category column and select “create hierarchy”.

Next, go to the subcategory column and you’ll notice a new option called “add to existing hierarchy”. Select our newly created hierarchy (default will be named after the top level in the hierarchy), and it will add subcategory underneath category within the hierarchy. Pretty neat stuff but also very manual.

From this, we can see that there are a few components to a hierarchy that we will need to address in our TOM script:

1. Name

2. Levels

3. Order of levels (Ordinal)

4. Column in each level

5. Name of level

Using TOM to See Hierarchies, Levels, Ordinals, and Source Columns

Now that the data model contains a hierarchy, we can publish it up to a Premium enabled workspace in the Power BI service and see it using our TOM script. I won’t go into details on building out this script from scratch, so please reference this blog post for a complete walk through on connecting to your workspace and building a simple C# application to use with this demo.

To list out the hierarchies in the data model, you will need something like this script in your code (entire zip file is in the DataOnWheels github for reference):

// List out the hierarchies in the product table

foreach (Hierarchy hierarchy in table_product.Hierarchies)

{

Console.WriteLine($"Hierarchies: {hierarchy.Name}");

}

And poof there it is! Our Category Hierarchy! Next we will have our script list out the levels within the hierarchy.

// List out the levels in our Category hierarchy

Hierarchy hierarchy_category = table_product.Hierarchies["Category Hierarchy"];

foreach (Level level_categoryhierarchy in hierarchy_category.Levels)

{

Console.WriteLine($"Category Hierarchy Levels: {level_categoryhierarchy.Name}");

}

Great, and the next piece will be the ordinal, or the order that the hierarchy levels should be placed. I’m going to adjust the last code so now it will tell us the ordinal/order of each level before it gives us the name. Notice that this starts at 0, not 1.

// List out the levels in our Category hierarchy

Hierarchy hierarchy_category = table_product.Hierarchies["Category Hierarchy"];

foreach (Level level_categoryhierarchy in hierarchy_category.Levels)

{

Console.WriteLine($"Category Hierarchy Level {level_categoryhierarchy.Ordinal}: {level_categoryhierarchy.Name}");

}

And for our final piece of the puzzle, the column name that this level of the hierarchy comes from.

// List out the levels in our Category hierarchy

Hierarchy hierarchy_category = table_product.Hierarchies["Category Hierarchy"];

foreach (Level level_categoryhierarchy in hierarchy_category.Levels)

{

Console.WriteLine($"Category Hierarchy Level {level_categoryhierarchy.Ordinal}: {level_categoryhierarchy.Name} from {level_categoryhierarchy.Column.Name}");

}

Editing a Hierarchy Using TOM

Let’s switch it up and begin editing our existing hierarchy by changing the name of the hierarchy, the name of the levels, the source columns, and swap the ordinances. Typically you will not need to do any or all of these things, but it may be useful in rare use cases.

To start, we will rename the hierarchy itself. Now it will be important to reference the Category Hierarchy by the lineage tag after we rename it. The lineage tag won’t change even after you change the name property of the hierarchy itself. Please note your lineage tag will be different from mine, so run the script that will list the lineage tag next to the name (below) first then replace that portion in the rest of the code where there are references to the reference tag.

// List out the hierarchies in the product table

foreach (Hierarchy hierarchy in table_product.Hierarchies)

{

Console.WriteLine($"Hierarchies: {hierarchy.Name}, Lineage Tag = {hierarchy.LineageTag}");

}

// List out the levels in our category hierarchy

Hierarchy hierarchy_category = table_product.Hierarchies.FindByLineageTag("9aeadacd-d48d-48cb-948f-16700e030fe7");

foreach (Level level_categoryhierarchy in hierarchy_category.Levels)

{

Console.WriteLine($"Category Hierarchy Level {level_categoryhierarchy.Ordinal}: {level_categoryhierarchy.Name} from {level_categoryhierarchy.Column.Name}");

}

In the Power BI service, we can check if this rename effort was successful by entering edit mode.

Success! Let’s try changing the name of a level next then swap the order around.

//Hierarchies:

//Editing an existing hierarchy originally called Category Hierarchy

{

hierarchy_category.Name = "Category Hierarchy Rename Test"; //this renames the hierarchy, note the lineage tag will remain unchanged

Console.WriteLine($"Category Hierarchy Renamed");

}

//Editing an existing hierarchy level

Level level_Category = hierarchy_category.Levels.FindByLineageTag("fe12a6fc-1023-43f9-bfdc-c59f65435323");

Level level_Subcategory = hierarchy_category.Levels.FindByLineageTag("fbb4aa00-35dc-4490-bc40-3190b354ea54");

{

level_Category.Name = "Category Test";

level_Subcategory.Name = "Subcategory Test";

Console.WriteLine($"Category Hierarchy Levels Renamed");

Awesome! Okay now for the final piece of the puzzle – switching the ordinances to make subcategory the top of the hierarchy. Note, you will need to start at level 0. Also, if you are experiencing errors in saving the model, make sure you are out of edit mode in the Power BI Service. While it’s helpful to be in that mode to see your changes, it will be impossible to make additional changes via XMLA until you are out of it.

//Hierarchies:

//Editing an existing hierarchy originally called Category Hierarchy

{

hierarchy_category.Name = "Category Hierarchy Rename Test"; //this renames the hierarchy, note the lineage tag will remain unchanged

Console.WriteLine($"Category Hierarchy Renamed");

}

//Editing an existing hierarchy level

Level level_Category = hierarchy_category.Levels.FindByLineageTag("fe12a6fc-1023-43f9-bfdc-c59f65435323");

Level level_Subcategory = hierarchy_category.Levels.FindByLineageTag("fbb4aa00-35dc-4490-bc40-3190b354ea54");

{

level_Category.Name = "Category Test";

level_Category.Ordinal = 1;

level_Subcategory.Name = "Subcategory Test";

level_Subcategory.Ordinal = 0;

Console.WriteLine($"Category Hierarchy Levels Renamed & Reordered");

}

// List out the levels in our category hierarchy

foreach (Level level_categoryhierarchy in hierarchy_category.Levels)

{

Console.WriteLine($"Category Hierarchy Level {level_categoryhierarchy.Ordinal}: {level_categoryhierarchy.Name} Lineage Tag: {level_categoryhierarchy.LineageTag} from {level_categoryhierarchy.Column.Name}");

}

Boom now we have proven we can reorder the levels as well as rename them and the hierarchy itself.

Adding Hierarchy Levels & Hierarchies via TOM

Now we are finally ready to add a brand new level into our hierarchy! In the sample data, the model column should go below subcategory in my hierarchy. To add a level to the hierarchy we will need a few items – the name of the level, the ordering of the level, and the column it should reference. You can add a lineage tag as well (Power BI will not add one unless you made this level in the desktop application). Don’t forget to add the level you’ve created to the hierarchy or else it will stay in cache and never get added.

//Hierarchies:

//Editing an existing hierarchy originally called Category Hierarchy

{

hierarchy_category.Name = "Category Hierarchy Rename"; //this renames the hierarchy, note the lineage tag will remain unchanged

Console.WriteLine($"Category Hierarchy Renamed");

}

//Editing an existing hierarchy level

Level level_Category = hierarchy_category.Levels.FindByLineageTag("fe12a6fc-1023-43f9-bfdc-c59f65435323");

Level level_Subcategory = hierarchy_category.Levels.FindByLineageTag("fbb4aa00-35dc-4490-bc40-3190b354ea54");

{

level_Category.Name = "Category";

level_Category.Ordinal = 1;

level_Subcategory.Name = "Subcategory";

level_Subcategory.Ordinal = 0;

Console.WriteLine($"Category Hierarchy Levels Renamed & Reordered");

}

//Adding a new level to the hierarchy if it doesn't already exist

if (hierarchy_category.Levels.ContainsName("Model"))

{

Console.WriteLine($"Hierarchy Level Exists");

}

else

{

Level level_Model = new Level()

{

Name = "Model",

Ordinal = 2,

Column = table_product.Columns.Find("Model")

};

hierarchy_category.Levels.Add(level_Model);

Console.WriteLine($"Hierarchy Level Added");

Let’s try making our own hierarchy from scratch. To review, we will need to have a name for our new hierarchy, the name of the levels, the order of the levels, and the column of the levels. We will also need to explicitly add the new hierarchy to the model then add the levels to that hierarchy.

//Add a new hierarchy if it doesn't already exist

if (table_product.Hierarchies.ContainsName("New Hierarchy"))

{

Console.WriteLine($"New Hierarchy Exists");

}

else

{

Hierarchy hiearchy_new = new Hierarchy()

{

Name = "New Hierarchy",

};

table_product.Hierarchies.Add(hiearchy_new);

Console.WriteLine($"Hierarchy Added");

Level level_one = new Level()

{

Name = "Model",

Ordinal = 0,

Column = table_product.Columns.Find("Model")

};

Level level_two = new Level()

{

Name = "Product",

Ordinal = 1,

Column = table_product.Columns.Find("Product")

};

hiearchy_new.Levels.Add(level_one);

hiearchy_new.Levels.Add(level_two);

Console.WriteLine($"Levels added to new hiearchy");

};

Awesome! Now we know we can programmatically add hierarchies, add levels, rearrange levels, rename levels, and point levels to different columns. This won’t apply to many use cases of Power BI, but for those of you embedding a Power BI solution into your application, this should offer greater flexibility and integration with your existing .NET applications.

Additional Resources:

- https://docs.microsoft.com/en-us/analysis-services/tom/tom-pbi-datasets?view=asallproducts-allversions

- https://powerbidocs.com/2019/12/21/power-bi-hierarchies/

- https://docs.microsoft.com/en-us/dotnet/api/microsoft.analysisservices.tabular.hierarchy?view=analysisservices-dotnet

- https://docs.microsoft.com/en-us/dotnet/api/microsoft.analysisservices.tabular.level?view=analysisservices-dotnet

- https://docs.microsoft.com/en-us/dotnet/api/microsoft.analysisservices.tabular.level.ordinal?view=analysisservices-dotnet#microsoft-analysisservices-tabular-level-ordinal

- https://github.com/Anytsirk12/DataOnWheels

As I mentioned in my original post,

As I mentioned in my original post,